Neuromorphic Space Imaging

Making space safer with biology-inspired vision sensors

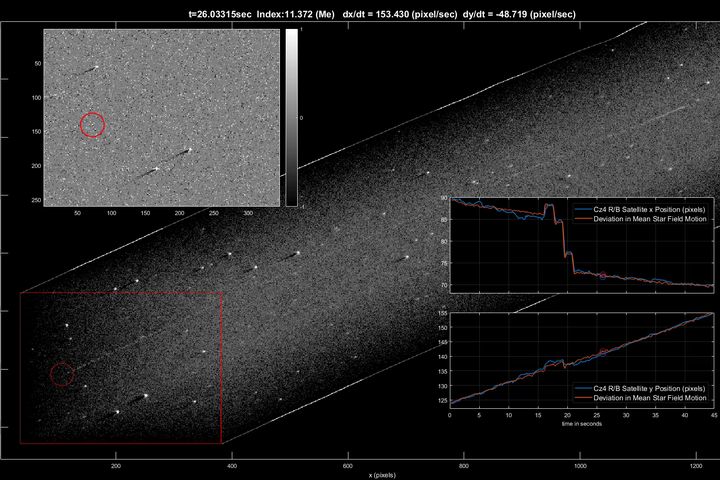

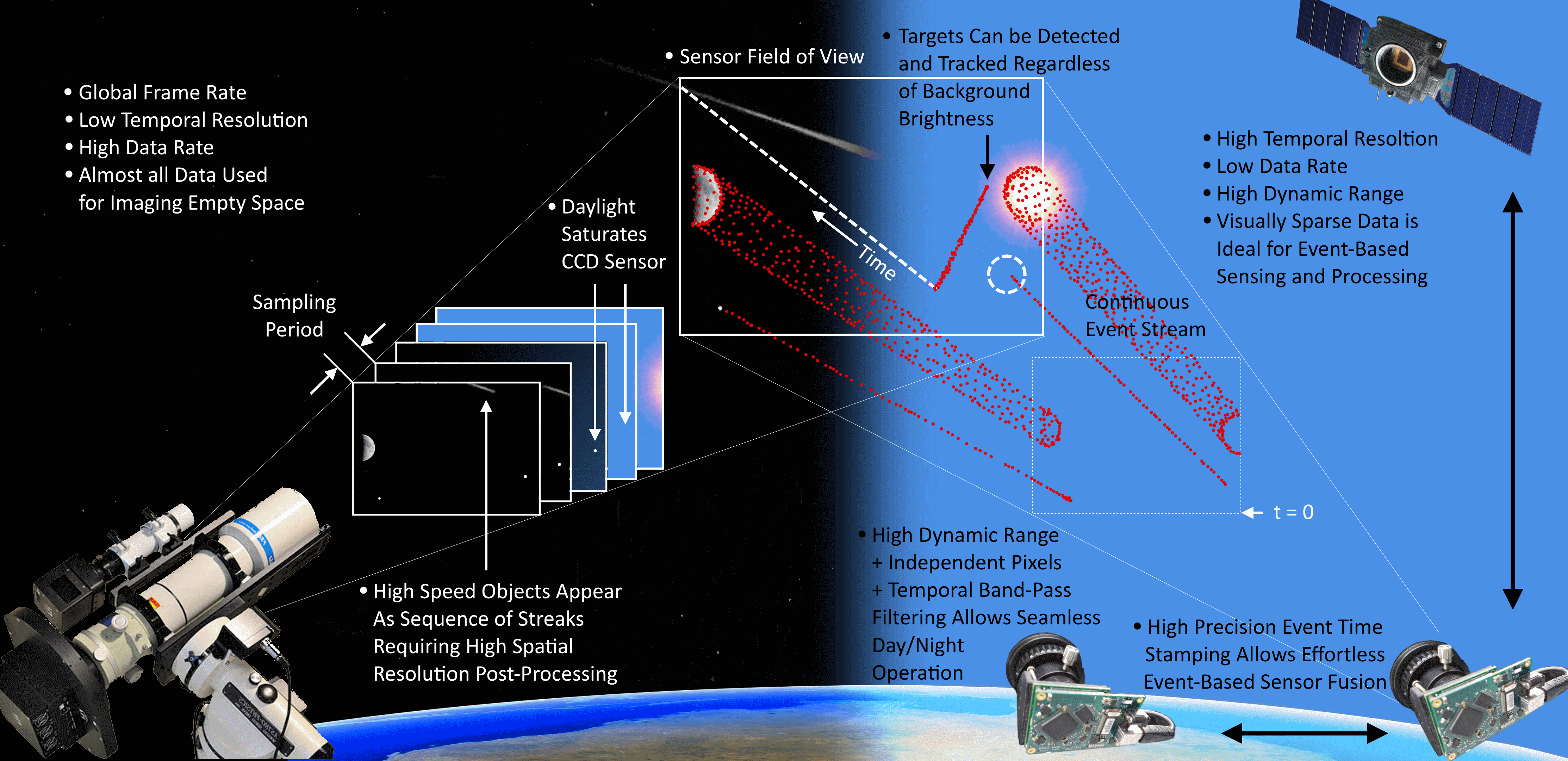

Overview of neuromorphic space imaging (image credit: Saeed Afshar)

Neuromorphic Vision Sensors for Astronomy and Space Situational Awareness

Space clutter has become a growing problem for both satellite and crewed space missions, as the likelihood and number of collisions continue to rise. This has created an immediate need for better ways to detect and track objects in space. At Western Sydney University, we have been working with dynamic vision sensors and neuromorphic algorithms to build novel systems for terrestrial and orbital space imaging applications. Through telescope field trials with biology-inspired neuromorphic cameras, we have demonstrated the ability to detect both space junk and satellites during daytime and night-time observations using an identical optical telescope configuration. We have also demonstrated exciting capabilities in daytime star tracking, high-speed object detection, and high-speed, dynamic adaptive optics.

The neuromorphic sensing approach used in this work offers a different imaging paradigm and has exciting potential for high-speed, low-power imaging applications. Neuromorphic engineering uses ideas and principles from biology to create silicon hardware and algorithms that aim to achieve the power efficiency, robustness, and speed of biological systems. At the International Centre for Neuromorphic Systems (ICNS) at Western Sydney University, we apply these neuromorphic principles and devices to tackle real-world problems.

Our unique application of neuromorphic sensing to space imaging leverages the radically different sensing paradigm offered by these sensors, allowing us to demonstrate capabilities not currently possible using conventional astronomy cameras. Key among these capabilities is the ability to detect and track satellites at high speed, a critical requirement for improving our ability to track and predict the motion of satellites and space debris. Our results have confirmed that the devices can observe such resident space objects (RSOs) from low Earth orbit (LEO) through to geostationary orbit (GEO). Significantly, observations of these RSOs were made during both daytime and night-time (terminator) conditions without modification to the camera or optics.

Because the pixels in these sensors operate independently and asynchronously, the sensors can image stars and satellites during daytime hours, which offers a dramatic capability increase for terrestrial optical sensors. Additionally, we have shown that these sensors actually perform better under motion, and that their high temporal resolution provides unique capabilities far in excess of those expected from the spatial resolution of the sensor alone.
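To make the idea of independent, asynchronous pixels concrete, the following is a minimal sketch of a commonly used simplified model of an event-based pixel: it emits an event only when the log intensity changes by more than a threshold since its last event. This is an illustration under stated assumptions, not the circuit or processing used in our sensors; the function name `generate_events` and the `threshold` value are hypothetical.

```python
import math

def generate_events(samples, threshold=0.2):
    """Simulate a single event-based pixel (simplified, illustrative model).

    samples: list of (timestamp, intensity) pairs, with intensity > 0.
    threshold: assumed contrast threshold on log intensity (hypothetical value).
    Returns a list of (timestamp, polarity) events, polarity +1 (ON) or -1 (OFF).
    """
    events = []
    _, first_intensity = samples[0]
    reference = math.log(first_intensity)  # log intensity at the last event
    for t, intensity in samples[1:]:
        delta = math.log(intensity) - reference
        # Emit one event per threshold crossing, then shift the reference level.
        while abs(delta) >= threshold:
            polarity = 1 if delta > 0 else -1
            events.append((t, polarity))
            reference += polarity * threshold
            delta = math.log(intensity) - reference
    return events

# A pixel viewing an unchanging scene stays silent.
static = [(t, 100.0) for t in range(5)]
static_events = generate_events(static)

# A pixel that brightens (for example, as an object crosses it) emits ON events.
brightening = [(0, 100.0), (1, 100.0), (2, 150.0), (3, 150.0)]
bright_events = generate_events(brightening)
```

In this model a pixel that sees no change produces no output at all, while a pixel that sees a change reports it immediately and independently of its neighbours. Only changes in the scene generate data.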

Because of their low power consumption and built-in data compression, there is also significant interest in using these sensors on orbital platforms. My talk will discuss our latest results from terrestrial imaging with event-based sensors and introduce our projects to explore the use of these devices from orbit. In addition, I will showcase some applications of this technology to other tasks, such as hypersonic detection, adaptive optics, and seeing through obstructions.

Featured Tweet

Did you know there are more than 23,000 pieces of debris in Earth’s orbit? This is a problem as debris can damage spacecraft & satellites. Today the Chief Scientist is @westernsydneyu learning about how neuromorphic computing is applied to detect space debris & track satellites🛰️ pic.twitter.com/x7mP85y6VH

— Chief Scientist (@ScienceChiefAu) January 28, 2020

Media Coverage

- Future Makers: Volume 2: Focusing on Space Junk

- Plan Jericho At the Edge: Neuromorphic Sensors Enhance Event-Based Situational Awareness

- Australian Defence Magazine: Space tracking investment increased