Adaptive Optics with Neuromorphic Sensors

Exploring the Use of Event-Based Imaging Sensors for Turbulence Characterisation and Adaptive Optics

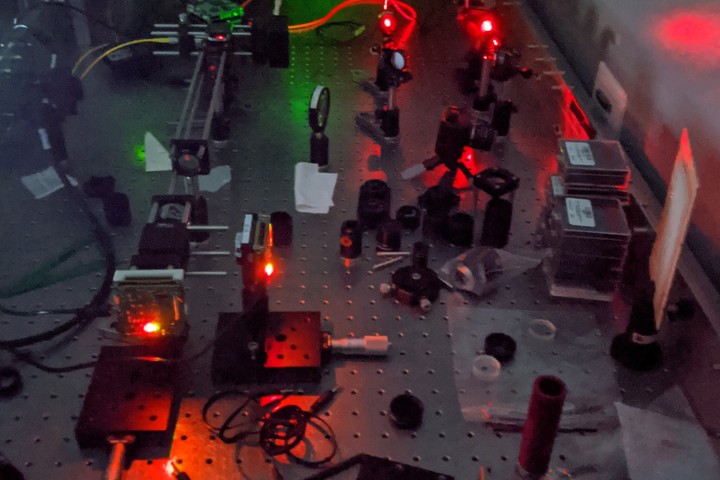

An experimental wavefront sensor using two event-based cameras


The objective of this research is to investigate the potential and applications of event-based neuromorphic cameras in adaptive optics systems, specifically for small and large telescope configurations. These devices could enable high-speed adaptive optics correction using conventional equipment configurations, and may also allow new approaches to adaptive optics that exploit the spatio-temporal output of the cameras.

Neuromorphic cameras contain specialized pixels that generate data only in response to changes in log-intensity relative to a reference level that each pixel sets independently. Data are emitted asynchronously, and only from pixels that detect a change exceeding a contrast threshold that is configurable on the device. As a result, the sensor consumes less power and produces far less data than a conventional imaging device, and its events can be processed at high speed. Recent applications in space imaging have demonstrated that these cameras are suitable for space observation through conventional optical telescopes. This work will build on that success to investigate applications of these cameras to adaptive optics tasks.
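The pixel behaviour described above can be illustrated with a simplified, noise-free simulation. This is a minimal sketch assuming an idealised pixel model: each pixel keeps a log-intensity reference, emits one event per threshold crossing, and moves its reference by the crossed amount. The function name `generate_events` and the default threshold value are hypothetical, and real sensors add noise, refractory periods and per-pixel threshold mismatch that are not modelled here.

```python
import numpy as np


def generate_events(log_frames, timestamps, threshold=0.2):
    """Simplified event-camera pixel model (hypothetical, noise-free).

    log_frames: array of shape (T, H, W) holding log-intensity samples.
    timestamps: array of shape (T,) with the sample times.
    threshold:  contrast threshold in log-intensity units (assumed value).
    Returns a list of (t, x, y, polarity) tuples.
    """
    log_frames = np.asarray(log_frames, dtype=float)
    # Each pixel starts with its own reference (setpoint) at the first sample.
    reference = log_frames[0].copy()
    events = []
    for k in range(1, len(timestamps)):
        diff = log_frames[k] - reference
        # Number of whole threshold crossings since the pixel's last event.
        n_cross = np.floor(np.abs(diff) / threshold).astype(int)
        ys, xs = np.nonzero(n_cross)
        for y, x in zip(ys, xs):
            polarity = 1 if diff[y, x] > 0 else -1
            for _ in range(n_cross[y, x]):
                events.append((timestamps[k], int(x), int(y), polarity))
            # Move the reference by the amount that was signalled.
            reference[y, x] += polarity * n_cross[y, x] * threshold
    return events
```

Only pixels whose log-intensity changes by at least one threshold produce output, which is why a static scene generates no data at all.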

The research project aims to achieve the following objectives:

- Develop hardware, software, and algorithms to integrate an event-based camera into an existing adaptive optics configuration.
- Explore prime-focus telescope optics, including directly evaluating the evolution of the point spread function (PSF). Physical parameters of the turbulence in the optical path will be determined by signal processing of the event-based sensor output.
- Explore placing the event-based sensor in a plane displaced from the telescope pupil, as in traditional curvature wavefront sensing. Unlike traditional systems, which must image two planes, we will use the spatio-temporal variation at a single plane to extract the same information about the aberrations.
- Install and test the sensor as part of a small, amateur-class telescope AO system.
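One simple way to follow the evolution of a PSF from the event stream is to group events into short time windows and track where they occur. The sketch below computes the mean event position in each window as a rough proxy for image motion (tip/tilt). This is an illustrative assumption, not the project's method: events cluster at the leading and trailing edges of a moving spot, so the mean position only approximates the spot location, and polarity is ignored. The function name `windowed_centroids` and the window length are hypothetical.

```python
import numpy as np


def windowed_centroids(events, window):
    """Mean event position in consecutive time windows (hypothetical helper).

    events: array-like of shape (N, 4) with columns t, x, y, polarity,
            sorted by time.
    window: window length, in the same units as t.
    Returns an array of shape (M, 3): (window start, mean x, mean y)
    for each window that contains at least one event.
    """
    events = np.asarray(events, dtype=float)
    t = events[:, 0]
    # Index of the time window each event falls into.
    bins = np.floor((t - t[0]) / window).astype(int)
    rows = []
    for b in np.unique(bins):
        in_window = bins == b
        rows.append((t[0] + b * window,
                     events[in_window, 1].mean(),
                     events[in_window, 2].mean()))
    return np.array(rows)
```

For example, four events at t = 0, 0.5, 1.0 and 1.5 with a window of 1.0 give two centroids, one per window. Shorter windows give finer time resolution but fewer events per estimate.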
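For comparison with the single-plane approach, conventional curvature wavefront sensing combines two defocused images, one on each side of focus, into a normalised difference signal. Following the usual formulation, one image is rotated by 180 degrees so that both share pupil coordinates, S(r) = (I1(r) - I2(-r)) / (I1(r) + I2(-r)). The sketch below computes this baseline two-plane signal; the function name `curvature_signal` and the small `eps` guard against division by zero are illustrative choices, and the image orientation convention depends on the actual optical layout.

```python
import numpy as np


def curvature_signal(intra, extra, eps=1e-12):
    """Normalised difference used in conventional two-plane curvature sensing.

    intra, extra: 2-D intensity images recorded on either side of focus.
    The extra-focal image is rotated by 180 degrees (r -> -r) so that
    both images are expressed in the same pupil coordinates.
    eps: small constant (assumed) that avoids division by zero in dark pixels.
    """
    i1 = np.asarray(intra, dtype=float)
    i2 = np.asarray(extra, dtype=float)[::-1, ::-1]
    return (i1 - i2) / (i1 + i2 + eps)
```

A flat wavefront gives matching intensity in both planes and therefore a near-zero signal; the single-plane approach aims to recover equivalent information from the temporal variation recorded at one plane.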

We expect this research to lead to the development of a novel, high-speed adaptive optics system capable of operating well above the speeds of conventional and existing equipment. By leveraging the unique characteristics of event-based sensors, the project will attempt to develop new methods for performing adaptive optics tasks and estimating turbulence parameters.

Conference Presentations and Publications

- A. Lambert and G. Cohen, “Study of a spatio-temporal sensor for turbulence characterisation and wavefront sensing (Conference Presentation),” Proc. SPIE 10787, Environmental Effects on Light Propagation and Adaptive Systems, 1078702 (11 October 2018); https://doi.org/10.1117/12.2326795
- F. Kong, G. Cohen, and A. Lambert, “Using event-based optical flow to determine the Shack-Hartmann spot displacements,” in Imaging and Applied Optics 2019 (COSI, IS, MATH, pcAOP), OSA Technical Digest (Optical Society of America, 2019), paper PW4C.3.
- F. Kong, A. Lambert, and G. Cohen, “Shack-Hartmann wavefront sensing using spatial-temporal data from event-based sensor,” in XII Workshop on Adaptive Optics for Industry and Medicine, Oct. 2019.